Crawler cycle time

The time the crawler currently takes to try every host on the network once.

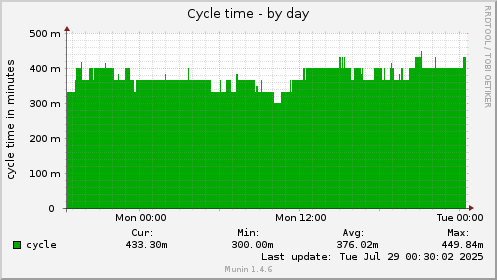

Day

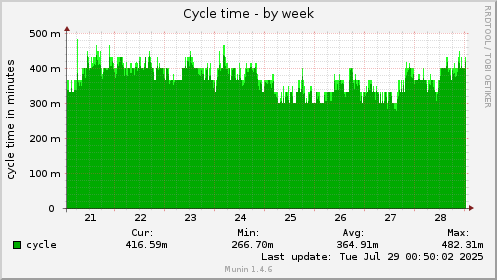

Week

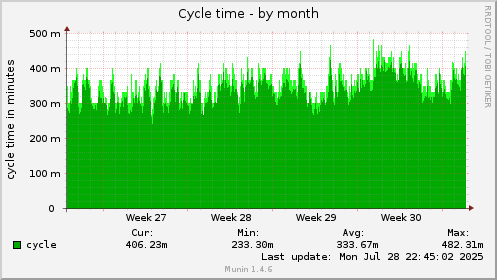

Month

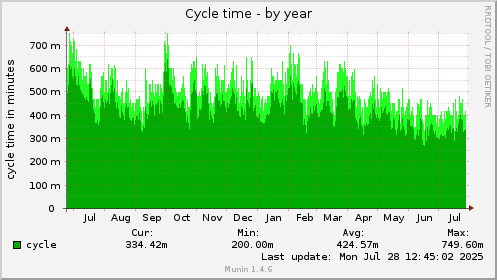

Year

Because the crawler crawls a fixed number of hubs every second, this graph should be proportional to the number of hubs on the network, and should therefore have an oscillating shape similar to that of the hub count on the history page. The thing to watch for is a deviation from that pattern, which indicates that the crawler suddenly picked up a large number of hosts and is taking a while to work through them.
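The proportionality described above can be sketched in a few lines. This is only an illustration, not the crawler's actual code: the function names, the crawl rate, the hub counts, and the 50% tolerance are all hypothetical values chosen for the example.

```python
def expected_cycle_seconds(hub_count, hubs_per_second):
    """Cycle time if the crawler tries a fixed number of hubs per second."""
    if hubs_per_second <= 0:
        raise ValueError("hubs_per_second must be positive")
    return hub_count / hubs_per_second


def deviates(observed_seconds, hub_count, hubs_per_second, tolerance=0.5):
    """True if the observed cycle time is well above the proportional estimate.

    tolerance is an assumed threshold (0.5 means 50% above the estimate).
    """
    expected = expected_cycle_seconds(hub_count, hubs_per_second)
    return observed_seconds > expected * (1 + tolerance)


# Hypothetical numbers: 3000 hubs crawled at 10 hubs per second.
print(expected_cycle_seconds(3000, 10))  # 300.0
print(deviates(320, 3000, 10))           # False: close to the estimate
print(deviates(900, 3000, 10))           # True: the crawler is backlogged
```

With a fixed crawl rate, doubling the hub count doubles the expected cycle time, which is why the graph should track the hub count on the history page.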

There are two main reasons this occurs. The more common one is that the crawler has been offline for some time. When it comes back online it quickly becomes backlogged: it does not yet know that most of the hosts it knew about have gone offline, and at the same time new hosts to try come pouring in.

The second reason, which has mostly been eliminated, is that the crawler falls into the Foxy network. That network is at least an order of magnitude larger than G2, and there are not enough resources available to crawl that many hosts. The cycle time then keeps climbing, because the crawler stays backlogged with new hosts to try and never swings back around to retry the hosts it has already tried.
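The difference between the two cases can be illustrated with a toy queue model. It is a deliberate simplification under assumed numbers, not a description of how the crawler is implemented: `simulate_cycle_time` and all of its parameters are hypothetical, and the model only tracks hosts waiting to be tried against a fixed crawl rate.

```python
def simulate_cycle_time(initial_hosts, new_hosts_per_second, crawl_rate, seconds):
    """Return the estimated cycle time (in seconds) after each simulated second.

    The estimate is simply the number of pending hosts divided by the
    fixed crawl rate.
    """
    pending = initial_hosts
    history = []
    for _ in range(seconds):
        pending += new_hosts_per_second          # newly discovered hosts
        pending = max(0, pending - crawl_rate)   # hosts tried this second
        history.append(pending / crawl_rate)
    return history


# After downtime: a large stale backlog, but new hosts arrive slower
# than the crawl rate, so the cycle time recovers.
restart = simulate_cycle_time(1000, 5, 10, 100)

# Foxy-like case: new hosts arrive faster than they can be tried,
# so the cycle time keeps climbing.
foxy = simulate_cycle_time(1000, 100, 10, 100)

print(restart[0], restart[-1])  # 99.5 50.0
print(foxy[0], foxy[-1])        # 109.0 1000.0
```

In this model the backlog drains only when hosts arrive more slowly than the crawler tries them. That matches the behavior described above: a spike after downtime eventually settles, while a crawl that has wandered into a much larger network never catches up.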